"On September 11, 2001, a terrorist attack occurred in the United States that destroyed the World Trade Center in New York." Most people probably know that, and so does OpenAI's auto-completion engine "Davinci", which wrote that first sentence. Davinci also knows other historical events: if you ask what happened in Berlin on November 9, 1989, it answers, "On November 9, 1989, the wall between East and West Berlin fell." Davinci is also familiar with South American history and knows what happened in Santiago (Chile) on September 11, 1973: "On September 11, 1973, the Chilean military coup d'état took place against the government of Salvador Allende."

But as soon as the events are less well known, Davinci falters and invents something that sounds plausible but is not true. If you ask what happened in Grenchen on November 14, 1918, the answer you get is the breakup of Austria-Hungary. That did happen at about that time, but in a different place. (The answer we were looking for was the Swiss army opening fire during the national strike, killing three people.) Still, this event at least actually took place around then, unlike the terrorist attack in Zurich on February 29, 2016, in which, according to Davinci, at least 8 people died and 52 were injured.

But how does Davinci answer such questions?

To find out, we first ask a whole lot of questions. For each of the following cities, we want to know what happened on each of 160 random dates between 1990 and 2030:

Zurich, Berlin, New York, Belgrade, Moscow, Geneva, Grenchen, Beijing, Lusaka, Hanoi, Santiago, Osaka and Baghdad.
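A minimal sketch of how such a batch of questions could be assembled. It assumes one shared set of 160 distinct dates reused for every city (the article does not say whether each city got its own dates), and the prompt wording in `build_prompts` is hypothetical, since the exact prompt sent to Davinci is not given.

```python
import random
from datetime import date, timedelta

CITIES = ["Zurich", "Berlin", "New York", "Belgrade", "Moscow", "Geneva", "Grenchen",
          "Beijing", "Lusaka", "Hanoi", "Santiago", "Osaka", "Baghdad"]

def random_dates(n, start=date(1990, 1, 1), end=date(2030, 12, 31), seed=0):
    """Draw n distinct random dates between start and end (both inclusive)."""
    rng = random.Random(seed)
    span = (end - start).days
    offsets = rng.sample(range(span + 1), n)  # distinct day offsets
    return sorted(start + timedelta(days=o) for o in offsets)

def format_date(d):
    """Format a date like 'November 9, 1989'."""
    return f"{d.strftime('%B')} {d.day}, {d.year}"

def build_prompts(cities, dates):
    # Hypothetical prompt template; the article does not give the exact wording.
    return [(city, d, f"What happened in {city} on {format_date(d)}?")
            for city in cities for d in dates]

dates = random_dates(160)
prompts = build_prompts(CITIES, dates)
print(len(prompts))  # 2080
print(prompts[0][2])
```

With 13 cities and 160 dates this yields 13 × 160 = 2,080 questions.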

To visualize the answers, we once more turn to OpenAI, this time to a model that generates an embedding representing a sentence. An embedding is a multidimensional vector that depends on the content of the sentence: the more similar two sentences are, the closer their vectors lie in this multidimensional space. For example, the distance between "On October 7, 2005, a terrorist attack took place in Berlin, Germany, which left 12 people dead and over 50 injured. The perpetrator, a 17-year-old Iraqi refugee, was later apprehended and sentenced to life in prison." and "A terrorist attack took place in New York on February 21, 2016. The terrorist attacked people with a truck, killing eight and injuring dozens." is 0.5, while the distance between the first sentence and "On April 29, 2008, the Beijing Olympic stadium was host to the Opening Ceremony of the Beijing 2008 Olympic Games." is 0.7. (To keep the city name from having too much influence on the embeddings, it was replaced by "Gotham City" in each case.)
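The city-name replacement and the distance comparison could look roughly like this. The sketch assumes the distance is cosine distance (1 minus cosine similarity), which the article does not state explicitly. `anonymize` and `cosine_distance` are illustrative names, and the vectors below are tiny made-up stand-ins for the real embeddings returned by OpenAI's model.

```python
import numpy as np

def anonymize(sentence: str, city: str, placeholder: str = "Gotham City") -> str:
    """Replace the city name so it does not dominate the sentence embedding."""
    return sentence.replace(city, placeholder)

def cosine_distance(a, b) -> float:
    """1 - cosine similarity: 0 for identical directions, 1 for orthogonal vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return 1.0 - float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

sentence = "On October 7, 2005, a terrorist attack took place in Berlin, Germany."
print(anonymize(sentence, "Berlin"))
# On October 7, 2005, a terrorist attack took place in Gotham City, Germany.

# Toy 3-dimensional vectors, not real embeddings.
print(round(cosine_distance([1, 0, 0], [0, 1, 0]), 3))  # 1.0
```

Plain string replacement is deliberately simple; it would also alter words that merely contain the city name, such as "Berliner".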

We also sort the sentences into categories based on the words they contain. If a sentence contains "terror", we assign it to "terror"; if it contains "elect" or "referendum", it counts as "referendum". In this way we define 19 categories in total. Together with the vector representation of the sentences, this lets us display the sentences visually: the 4096 dimensions of the embeddings are reduced to two, the x and y coordinates. The individual categories on the right side can be hidden to get a better overview, and hovering the mouse over a point displays the sentence behind it.
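The keyword categorization and the reduction to x and y coordinates might be sketched as follows. Only the "terror" and "elect"/"referendum" rules come from the article. The other rules stand in for the remaining categories and are hypothetical, and letting the first matching rule win is also an assumption. The article does not say which method reduces the 4096 dimensions to two, so principal component analysis (PCA, computed here via NumPy's SVD) is used as a plainly named stand-in. t-SNE or UMAP would be common alternatives.

```python
import numpy as np

# "terror" and "elect"/"referendum" come from the article; the other
# rules are hypothetical placeholders for the remaining categories.
CATEGORY_KEYWORDS = {
    "terror": ["terror"],
    "referendum": ["elect", "referendum"],
    "earthquake": ["earthquake"],
    "coup": ["coup"],
    "shooting": ["shooting", "gunfire"],
}

def categorize(sentence: str) -> str:
    """Return the first category whose keyword occurs in the sentence (assumed order)."""
    text = sentence.lower()
    for category, keywords in CATEGORY_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return category
    return "other"

def project_2d(embeddings) -> np.ndarray:
    """Project embeddings onto their first two principal components (PCA via SVD)."""
    X = np.asarray(embeddings, dtype=float)
    X = X - X.mean(axis=0)  # center each dimension
    _, _, vt = np.linalg.svd(X, full_matrices=False)
    return X @ vt[:2].T  # shape (n_sentences, 2): x and y coordinates

print(categorize("A terrorist attack took place in Gotham City."))  # terror
print(categorize("Gotham City holds a referendum on independence."))  # referendum

# Random toy vectors standing in for 4096-dimensional embeddings.
points = project_2d(np.random.default_rng(0).normal(size=(20, 4096)))
print(points.shape)  # (20, 2)
```

Substring matching is crude: "elect" also matches "electricity", so a real run would need to check the categories by hand.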

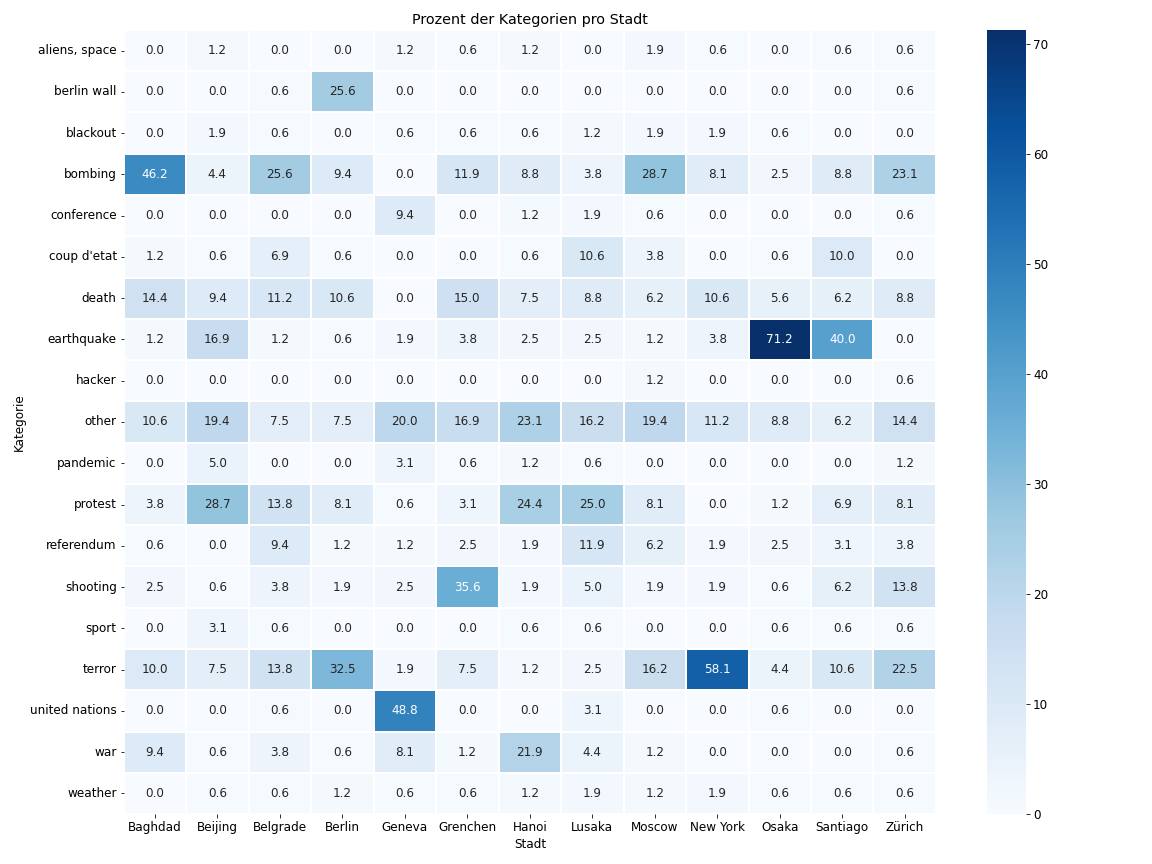

The first thing that stands out is that a large share of the answers is gloomy: terror, bombings, earthquakes, war and death. This also becomes clear when we look at the heatmap of events:

For each city, the heatmap shows what percentage of the events fell into each category. For example, 6.9% of the events generated for Belgrade are categorized as a coup d'état, and 13.8% of the events for Zurich as a shooting. The heatmap thus shows which events the model finds most plausible for which city. There are large differences between the cities, which are probably not entirely surprising.
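The percentages in the heatmap are simple per-city shares. Below is a minimal standard-library sketch. It assumes each answer has already been reduced to a (city, category) pair, and the records are toy data, not the article's results. If every city received 160 answers, a share such as Belgrade's 6.9% would correspond to 11 coup events (11/160 ≈ 6.9%).

```python
from collections import Counter, defaultdict

def category_shares(records):
    """Map each city to the percentage of its events that fall into each category."""
    counts = defaultdict(Counter)
    for city, category in records:
        counts[city][category] += 1
    return {
        city: {cat: 100.0 * n / sum(counter.values()) for cat, n in counter.items()}
        for city, counter in counts.items()
    }

# Toy data, not the article's results.
records = [("Osaka", "earthquake")] * 3 + [("Osaka", "terror"),
           ("Berlin", "terror"), ("Berlin", "wall")]
shares = category_shares(records)
print(shares["Osaka"]["earthquake"])  # 75.0
print(shares["Berlin"])  # {'terror': 50.0, 'wall': 50.0}
```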

The most obvious are the earthquakes in Osaka: almost three quarters of all events generated for this city have to do with earthquakes. The training data probably contained a particularly large number of reports on the 2018 earthquake, and the model learned this association accordingly. In Santiago, too, almost half of the events are earthquakes.

Santiago also shows about 10% coups, and the figures for Lusaka are similar. Moscow (3.8%) and Belgrade (6.9%) also have more coups than the remaining cities.

One category that is strongly represented for many cities is terror. It is particularly frequent for New York (58.1% of events), but also for Berlin (32.5%).

The model also seems to be aware that Geneva frequently hosts international conferences and UN meetings. The U.S. wars in Iraq and Vietnam are also reflected in the heatmap. Other historical events, such as the fall of the Berlin Wall, can also be glimpsed. Coverage of the Beijing Olympics probably made it into the model as well.

But there are also a few surprises. For example, more than a third of the events in Grenchen are shootings. While army gunfire during the national strike did kill three people there more than 100 years ago, there have been few reports of gunfire in Grenchen in more recent history. For the model, however, shootings still make up a third of the events.

Next, we can also plot the various events over time:

Looking at the frequency of terrorist events, there are three waves: one around 2001, one around 2015, and a rise in the future. The first two waves coincide with the coverage of 9/11 and of the terrorist attacks around 2015. Coups d'état are more frequent at the beginning of the time series, between 1990 and 1992, and sporadic thereafter. The Berlin Wall, meanwhile, falls repeatedly, even though it fell in 1989, before the period under consideration. Earthquakes are very frequent in 2007, and many earthquake events are also predicted for 2022. Alien sightings also peak in 2022.
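Curves like these come from counting how many generated events fall into a category in each year. Below is a minimal sketch that assumes (date, category) pairs and uses toy data rather than the generated events. Years without any event are filled with zero so every series covers 1990 to 2030.

```python
from collections import Counter
from datetime import date

def yearly_series(events, category, start=1990, end=2030):
    """Count events of one category per year, including years with zero events."""
    counts = Counter(d.year for d, cat in events if cat == category)
    return {year: counts.get(year, 0) for year in range(start, end + 1)}

# Toy data, not the article's generated events.
events = [
    (date(2001, 9, 11), "terror"),
    (date(2001, 10, 2), "terror"),
    (date(2015, 11, 13), "terror"),
    (date(2007, 3, 1), "earthquake"),
]
terror = yearly_series(events, "terror")
print(terror[2001], terror[2015], terror[1990])  # 2 1 0
print(len(terror))  # 41
```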

Davinci, by the way, seems to have a weakness in Asian geography: 7.5% of the events for Hanoi are about North Korea. Hanoi or Pyongyang, as long as it's Vietnam.

Conclusion

The events clearly show that machine learning models are no better than the data they were trained on. Terrorist attacks and shootings are reported more often than less tragic events. The model also behaves quite humanly when it guesses events that never happened. If you asked a few random people on the street what took place in Berlin on a random date about 30 years ago, some would probably name the fall of the Berlin Wall. It is also common knowledge that conferences are often held in Geneva and that the Olympic Games were held in Beijing.

So for finding historical events, a traditional knowledge base is still far superior to even the best artificial intelligence available today.

And even for use cases that are more about creativity than about the correctness of the generated content, one should always be aware of what these models are based on, because these foundations paint a pretty grim picture of humanity.

All generated events

CSV with all of the generated events

Most of them are not pleasant at all. Among other things, the following is predicted for the future:

Geneva will be hit by an asteroid on August 28, but fortunately a conference to create a fund for refugees and migrants can still take place in December.

On December 28, however, the city is destroyed by a massive earthquake. In the summer of 2023, NATO bombs Belgrade. Also in the summer of 2023, there are massive earthquakes in Osaka and Chile.

On November 7, 2025, Nguyễn Văn Trỗi becomes Prime Minister of Vietnam and announces he will end the Vietnam War. It's about time.

In 2027, a pandemic breaks out in Beijing.

The White House is destroyed in a nuclear attack in August 2029.

On March 14, 2030, the city of Zurich votes to become independent, the vote passes, and Zurich becomes an independent country the following day.

And almost at the end, in Moscow, "On October 1, 2030, the aliens finally arrived."